Toxicity - a Hugging Face Space by evaluate-measurement

By a mysterious writer

Description

The toxicity measurement aims to quantify the toxicity of the input texts using a pretrained hate speech classification model.
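The measurement does two things: it scores each input text with a classifier, then optionally aggregates those scores. According to its documentation, the Hugging Face `evaluate` library loads this measurement with `evaluate.load("toxicity", module_type="measurement")`, and its `compute` method takes `predictions` plus an optional `aggregation` of `"maximum"` or `"ratio"`. The minimal sketch below reproduces only that aggregation logic so that it runs offline. Several parts are assumptions. `toy_toxicity_score` is a hypothetical keyword scorer that stands in for the pretrained hate speech model. `measure_toxicity` is an illustrative name and is not part of any library. The output keys and the 0.5 default threshold are modeled on the documented behaviour, not copied from its source.

```python
from typing import Callable, Dict, Optional, Sequence

# Hypothetical toy vocabulary: a stand-in for a pretrained hate speech
# classifier so the sketch runs without downloading a model.
TOY_TOXIC_WORDS = {"idiot", "stupid", "hate"}


def toy_toxicity_score(text: str) -> float:
    """Return a score in [0, 1]: twice the share of flagged words, capped at 1."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    hits = sum(w in TOY_TOXIC_WORDS for w in words)
    return min(1.0, 2 * hits / len(words))


def measure_toxicity(
    predictions: Sequence[str],
    scorer: Callable[[str], float] = toy_toxicity_score,
    aggregation: Optional[str] = None,
    threshold: float = 0.5,
) -> Dict[str, object]:
    """Score every text, then aggregate (assumed semantics, see lead-in)."""
    if not predictions:
        raise ValueError("predictions must contain at least one text")
    scores = [scorer(text) for text in predictions]
    if aggregation is None:
        # Default: one toxicity score per input text.
        return {"toxicity": scores}
    if aggregation == "maximum":
        # The single most toxic score across all inputs.
        return {"max_toxicity": max(scores)}
    if aggregation == "ratio":
        # Fraction of inputs whose score reaches the threshold.
        flagged = sum(score >= threshold for score in scores)
        return {"toxicity_ratio": flagged / len(scores)}
    raise ValueError(f"unknown aggregation: {aggregation!r}")


if __name__ == "__main__":
    texts = ["have a nice day", "you idiot", "I hate this stupid thing"]
    print(measure_toxicity(texts))
    print(measure_toxicity(texts, aggregation="maximum"))
    print(measure_toxicity(texts, aggregation="ratio"))
```

Keeping the scorer as a parameter separates the classifier from the aggregation. A real model's per-text probability can therefore replace the toy function without any change to the `"maximum"` and `"ratio"` logic.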

Jigsaw Unintended Bias in Toxicity Classification — Kaggle Competition, by Vaibhavb

Human Evaluation of Large Language Models: How Good is Hugging Face's BLOOM?

A quick tour

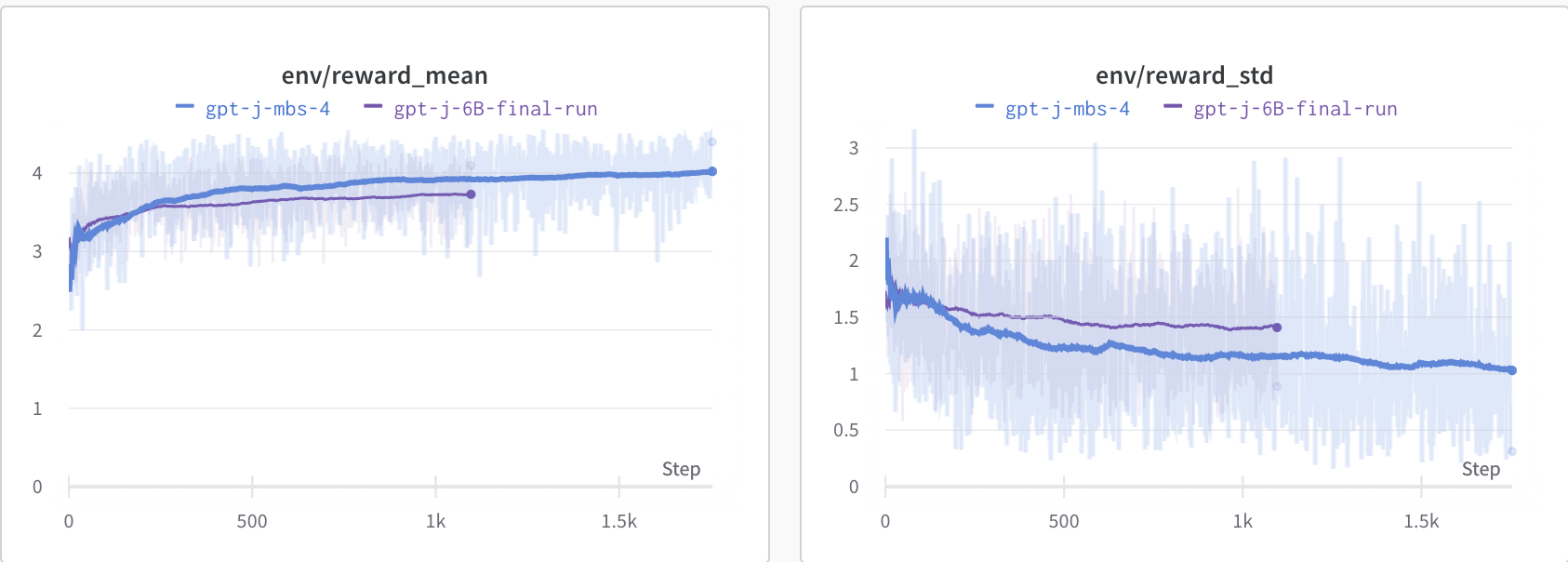

Detoxifying a Language Model using PPO

Thread by @younesbelkada on Thread Reader App

Beginner's Guide to Build Large Language Models From Scratch

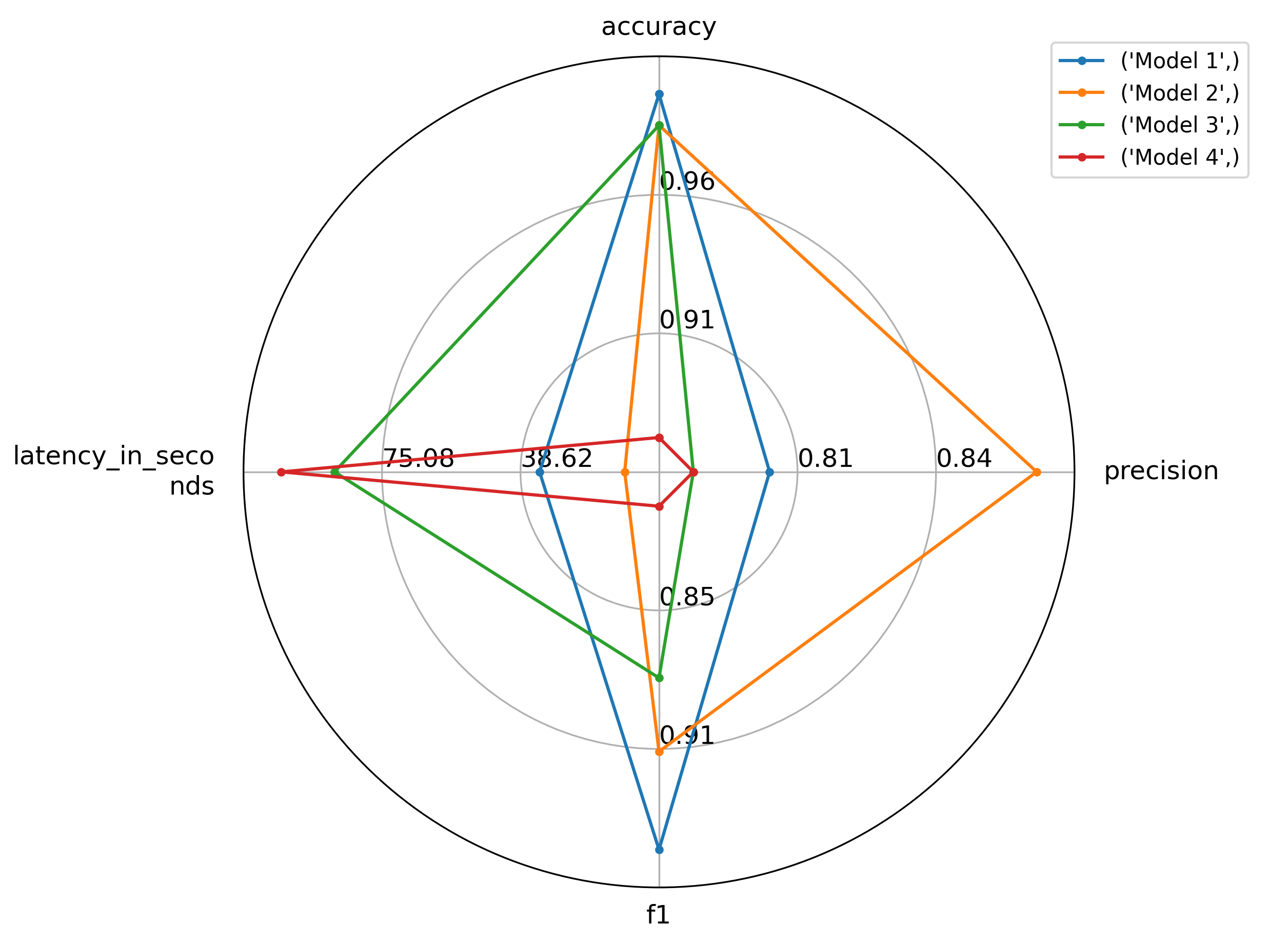

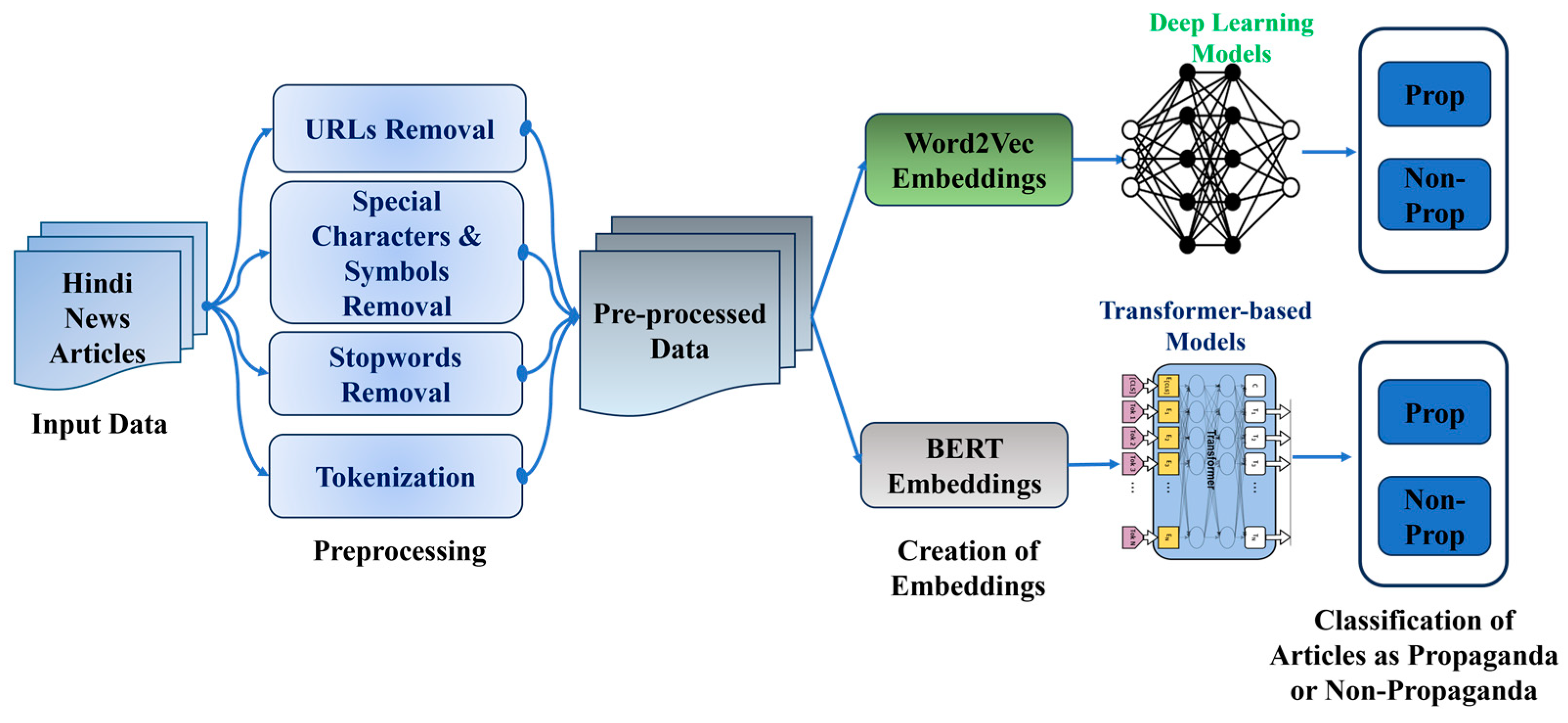

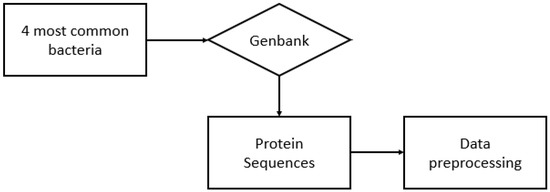

BDCC, Free Full-Text

Are you Selecting the Right Data for Your Computer Vision Projects?

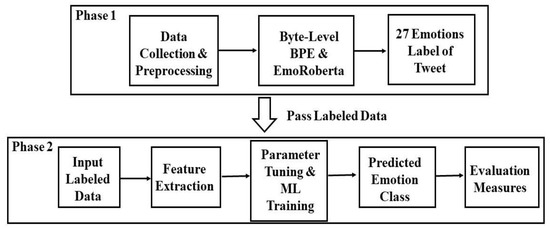

Sensors, Free Full-Text

BLOOMChat: a New Open Multilingual Chat LLM

Algorithms, Free Full-Text

Building a Comment Toxicity Ranker Using Hugging Face's Transformer Models, by Jacky Kaub

Controlling Text Generation with Plug and Play Language Models

Frontiers | Large language models and political science